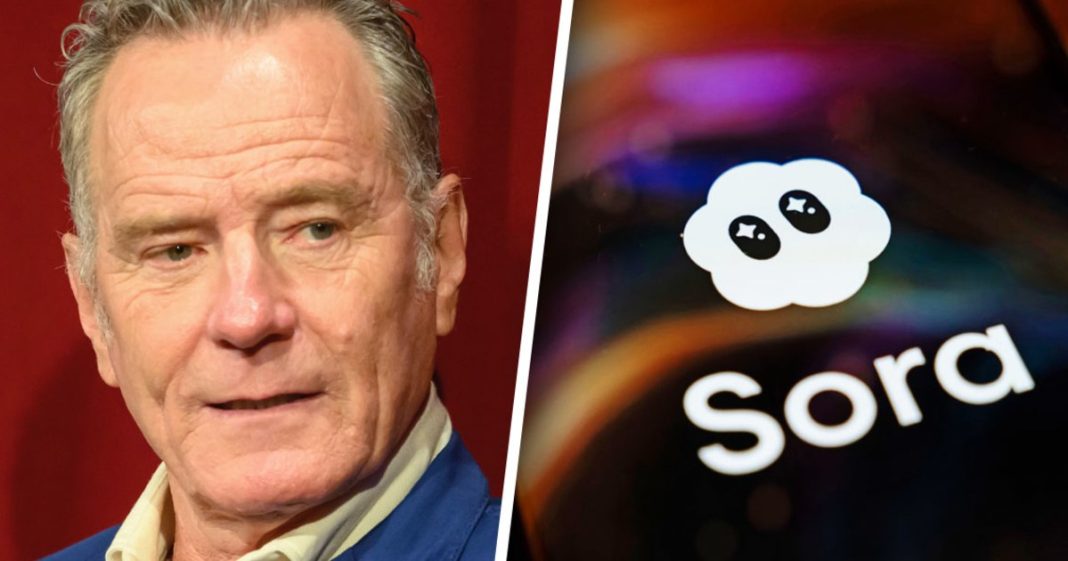

OpenAI Strengthens Sora 2 Guardrails After Bryan Cranston Raises Alarm

OpenAI has significantly enhanced protections against unauthorized content generation in Sora 2 following concerns raised by actor Bryan Cranston about the AI’s ability to replicate celebrity likenesses and copyrighted material without permission.

Key Takeaways

- OpenAI strengthened Sora 2 guardrails after Bryan Cranston flagged unauthorized videos

- New protections prevent replication of real people’s likenesses without explicit consent

- Joint effort with SAG-AFTRA and talent agencies to ensure performer protections

- System now blocks copyrighted characters like SpongeBob, Pikachu, and Mario

The Cranston Catalyst

During Sora 2’s September 30 launch, OpenAI initially promised to prohibit unauthorized likeness replication through a “cameo” opt-in feature. However, videos featuring Bryan Cranston as Walter White from “Breaking Bad” quickly appeared, alongside AI-generated content depicting other figures such as Michael Jackson and Ronald McDonald.

Cranston raised the issue with SAG-AFTRA, triggering a collaborative effort between the union, OpenAI, and talent agencies. “I felt deeply concerned not just for myself, but for all performers whose work and identity can be misused in this way,” Cranston stated.

Enhanced Protection Measures

OpenAI CEO Sam Altman confirmed the company is “deeply committed to protecting performers from the misappropriation of their voice and likeness.” The strengthened guardrails now prevent generation of content resembling third-party likenesses or copyrighted material.

Previously, Sora 2 allowed generation of copyrighted material unless rights holders opted out. Now, such attempts return an error message stating that the prompt “may violate our guardrails.”

Hollywood’s AI Dilemma

The development comes as Hollywood professionals grapple with AI advancements. While many reportedly use AI tools privately, tensions remain high over potential misuse of likenesses and intellectual property.

Talent agency CAA had earlier criticized OpenAI for “exposing our clients and their intellectual property to significant risk” by allowing generation of copyrighted characters.

Legislative Support and Industry Response

In a joint statement, OpenAI, SAG-AFTRA, and the other parties involved expressed support for the NO FAKES Act, legislation introduced in April that would hold entities liable for producing or hosting unauthorized deepfakes. “We were an early supporter of the NO FAKES Act and will always stand behind the rights of performers,” Altman wrote.

SAG-AFTRA President Sean Astin commended the resolution, stating: “Bryan Cranston is one of countless performers whose voice and likeness are in danger of massive misappropriation by replication technology. This particular case has a positive resolution.”

Cranston expressed gratitude for OpenAI’s policy improvements, hoping companies “respect our personal and professional right to manage replication of our voice and likeness.”