India Takes First Step to Regulate Artificial Intelligence

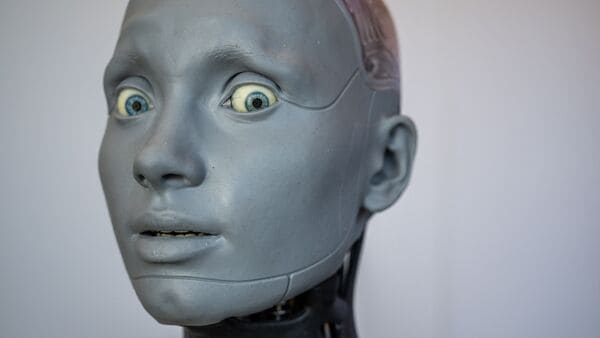

India has introduced its first regulatory framework for artificial intelligence, requiring social media platforms to have users declare content that is AI-generated or AI-altered. The draft rules aim to curb the rising threat of deepfakes and synthetic media.

Key Takeaways

- Social media platforms must ensure users label AI-generated content

- Companies must display AI labels or watermarks covering at least 10% of the visual display area (or, for audio, the first 10% of its duration)

- Failure to comply may result in loss of safe harbor protection

- Stakeholders have until November 6 to provide feedback

New Accountability Framework

The Ministry of Electronics and Information Technology (Meity) proposed amendments to the Information Technology Rules, 2021, reflecting growing concerns about deepfakes – fabricated content mimicking a person’s appearance, voice, or mannerisms.

Union IT Minister Ashwini Vaishnaw said the amendment "raises the level of accountability" for users, companies, and the government as the volume of deepfake content grows. Enforcement will be handled by officers of joint secretary rank in the central government and, in the case of police, by officers of Deputy Inspector General (DIG) rank.

Industry Response and Implementation

A government official confirmed that the ministry had consulted leading AI companies, which indicated that metadata can be used to identify AI-altered content. The obligation to identify and report deepfakes will lie with companies, not users, and rules on AI content will be incorporated into social media platforms' community guidelines.

Although Big Tech platforms enforce safety measures, Google's Gemini 2.5 Flash model has heightened concerns because of its ability to create realistic replica images. YouTube has expanded trials of tools that detect AI-generated content using creator data and internal algorithms.

Growing Deepfake Threat

A Gartner survey of 302 enterprise cybersecurity executives found that 62% reported their organizations had faced deepfake attacks in which AI was used to clone executives' voices or appearances.

“The proposed amendments to the IT Rules are a significant step in India’s evolving approach to AI governance,” said Dhruv Garg of India Governance and Policy Project.

Garg emphasized the need to balance authenticity and accountability with freedom of speech, warning against provisions that could restrict legitimate creative uses of synthetic media.

Legal Framework and Future Steps

Senior counsel N.S. Nappinai noted that existing laws already provide avenues for criminal and civil action against deepfakes, but suggested that standalone AI laws with specific criminal provisions would be a more effective deterrent.

The Parliamentary Standing Committee on Home Affairs had recommended in August that Meity develop a technological framework mandating watermarks on all shared media content, with CERT-In coordinating monitoring and detection alerts.

Recent Cases and Concerns

The Delhi High Court issued interim orders protecting film producer Karan Johar and actor Aishwarya Rai Bachchan from AI-generated deepfake misuse. Union Finance Minister Nirmala Sitharaman also flagged concerns about rising deepfake videos at the Global Fintech Fest.