Key Takeaways

- Google warns of new AI malware that queries large language models at runtime to adapt and rewrite its own code.

- Hackers are using social engineering to trick AI models like Gemini into writing malicious code.

- A booming black market for AI hacking tools is making advanced cyberattacks accessible to novices.

Google’s Threat Intelligence Group reports that attackers have begun deploying AI-powered malware that calls large language models during an attack to rewrite its own code, making it far harder to detect.

Self-Evolving Malware: PROMPTFLUX and PROMPTSTEAL

Google identified malware families, including PROMPTFLUX and PROMPTSTEAL, that use Large Language Models (LLMs). Instead of shipping with fixed payloads, these threats generate new malicious scripts when they execute.

PROMPTFLUX, written in VBScript, sends prompts to the Gemini API asking it to produce obfuscated versions of its code designed to evade antivirus software.

PROMPTSTEAL, reportedly used by the Russian APT28 group against Ukraine, disguises itself as an image generation tool. It uses the Qwen model to generate the commands it runs to steal local data, rather than carrying those commands hard-coded in the malware.

Hackers Are Now Tricking AI Systems

The report highlights that hackers are using social engineering against AI models themselves. They rely on innocent-seeming pretexts, such as posing as a Capture-the-Flag contestant to get Gemini to suggest vulnerabilities, or claiming to be a student who needs coding help for a final project.

This demonstrates a significant shift, as attackers now actively deceive AI systems, not just humans.

The Rapidly Growing Black Market for AI Hacking Tools

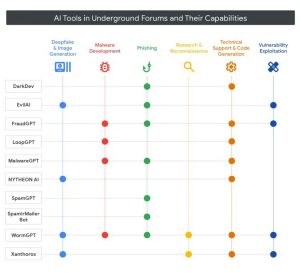

In 2025, the black market for AI-powered hacking tools has grown rapidly. Services like WormGPT, FraudGPT, and LoopGPT are sold with capabilities ranging from writing phishing emails to creating malware and exploiting system vulnerabilities.

This accessibility allows even novice hackers to create complex malware. At the same time, state-sponsored groups are using AI models for attack planning, intelligence gathering, and developing phishing campaigns and command-and-control servers.

Google’s Counter-Offensive

In response, Google has disabled accounts and projects linked to malicious actors and continues to harden its Gemini models against misuse.

Google DeepMind is also developing AI tools such as Big Sleep, which finds vulnerabilities, and CodeMender, which automatically patches them. The ultimate goal is advanced, safe AI that is used responsibly in an age where AI is both a powerful weapon and a crucial shield.

Source: Google